Stale (no heartbeat) nodes negatively affect performance in Jira Data Center

Platform Notice: Data Center Only - This article only applies to Atlassian apps on the Data Center platform.

Note that this KB was created for the Data Center version of the product. Data Center KBs for non-Data-Center-specific features may also work for Server versions of the product, however they have not been tested. Support for Server* products ended on February 15th 2024. If you are running a Server product, you can visit the Atlassian Server end of support announcement to review your migration options.

*Except Fisheye and Crucible

Summary

The cache replication in Jira Data Center 7.9 and later is asynchronous, which means that cache modifications aren’t replicated to other nodes immediately after they occur, but are instead added to local queues. They are then replicated in the background based on their order in the queue.

Before we can queue cache modifications, we need to create local queues on each of your nodes. Separate queues are created for each node so that modifications are properly grouped and ordered.

When a node is removed from the cluster, all nodes stop delivering cache replication messages to this node. This is true for nodes that were gracefully shut down, as well as the ones that were automatically moved into the OFFLINE state after a period of inactivity.

Messages are still queued locally for every node in the ACTIVE state and for every node in the NO HEARTBEAT state. Performance can therefore degrade in proportion to the number of nodes in the NO HEARTBEAT state, because each cache modification is pushed to an excessive number of local queues.

This problem is largely addressed in Jira by an automation that moves a node to the OFFLINE state if it stays in the NO HEARTBEAT state for more than two days (by default). However, if several nodes enter the NO HEARTBEAT state within that two-day window, performance can still be negatively affected.
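To make the scaling concrete, here is a back-of-the-envelope sketch. It assumes each cache modification costs one local queue write per target node (ACTIVE or NO HEARTBEAT); the function name and the numbers are illustrative and are not taken from Jira.

```shell
#!/bin/sh
# Rough model, not Jira code: each cache modification is assumed to cost
# one local queue write per target node.
# $1 = other ACTIVE nodes, $2 = NO HEARTBEAT nodes, $3 = cache modifications
queue_writes() {
    active="$1"
    stale="$2"
    modifications="$3"
    echo $(( (active + stale) * modifications ))
}

# Example: 3 other ACTIVE nodes and 1000 modifications,
# without and with 5 stale nodes.
queue_writes 3 0 1000   # prints 3000
queue_writes 3 5 1000   # prints 8000
```

Under this simplified model, five stale nodes more than double the queue writes for the same workload.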

Solution

Verify performance is impacted by stale nodes

Check for the following symptoms to confirm whether stale nodes are a factor on your Jira instance.

Performance degradation of actions that generate cache update events, scaling with their number. See JRASERVER-69652 for details.

A significant portion of all threads are running the replicateToQueue method.

A large number of undelivered cache replications between two nodes. See HealthCheck: Asynchronous Cache Replication Queues for details.
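One rough way to check the thread-related symptom is to count how many threads in a thread dump have a stack frame mentioning replicateToQueue. The sketch below assumes a plain-text, jstack-style dump saved to a file; the function name and file name are hypothetical and not part of Jira.

```shell
#!/bin/sh
# Count threads in a plain-text thread dump whose stack mentions replicateToQueue.
# Assumes jstack-style output, where each thread block starts with a line
# beginning with a double quote.
count_replicating_threads() {
    dump_file="$1"
    awk '
        /^"/ { total++; seen = 0 }                        # new thread header
        /replicateToQueue/ && !seen { hits++; seen = 1 }  # count each thread once
        END { printf "%d of %d threads in replicateToQueue\n", hits, total }
    ' "$dump_file"
}
```

For example, after saving a dump with `jstack <pid> > dump.txt`, run `count_replicating_threads dump.txt`. What counts as a "significant portion" is a judgment call; compare against a dump taken when the instance performs normally.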

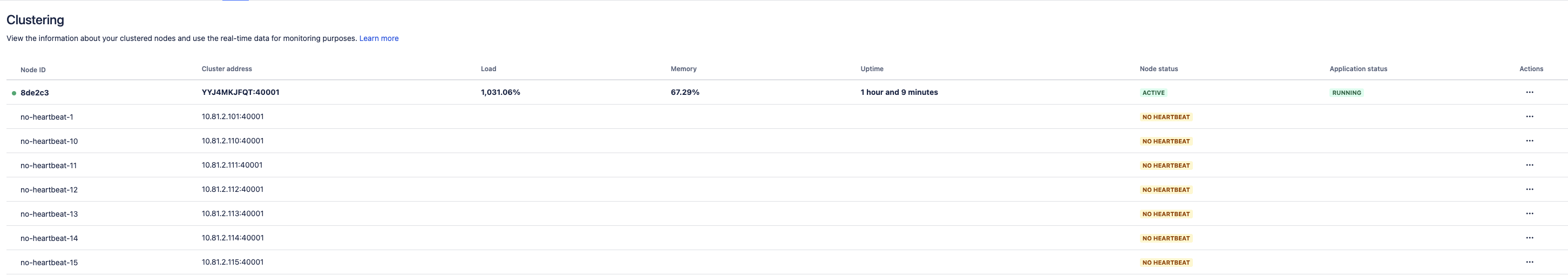

Admins can confirm the number of nodes in the NO HEARTBEAT status on the Administration > System > Clustering page, as seen in the below screenshot:

Gracefully shut down Jira nodes

When a node is removed from a cluster without gracefully shutting down Jira first, that node doesn't have a chance to send an OFFLINE status to the database.

Before you remove a VM or AWS instance from the Data Center cluster:

Shut down Jira by using the stop-jira.sh script in <JIRA_INSTALL>/bin or by stopping its service.

Then kill the AWS instance or power off the VM.
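The order of these two steps can be enforced in a decommissioning script. This is a minimal sketch, assuming a Linux node; the function name is hypothetical, the default path is only a placeholder for your own <JIRA_INSTALL>, and the actual instance termination is left to your tooling.

```shell
#!/bin/sh
# Sketch: stop Jira cleanly, and only then allow the machine to be removed.
# JIRA_INSTALL is a placeholder; point it at your installation directory.
JIRA_INSTALL="${JIRA_INSTALL:-/opt/atlassian/jira}"

decommission_node() {
    # Stop Jira first so the node can record its OFFLINE status.
    if "$JIRA_INSTALL/bin/stop-jira.sh"; then
        echo "Jira stopped; it is now safe to terminate the instance or power off the VM."
    else
        echo "stop-jira.sh failed; do not remove the machine yet." >&2
        return 1
    fi
}
```

Calling `decommission_node` before the termination step keeps the machine from being removed while Jira is still running.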

Update the default retention period

In some scenarios, the two-day time period to automatically move a node from the NO HEARTBEAT state to the OFFLINE state is too long. For example, such scenarios could include deployment with Ansible, AWS/K8s auto-scaling, etc.

Since Jira 8.11, this retention period can be changed from the default two days to a value in hours. It can be done using the system property jira.not.alive.active.nodes.retention.period.in.hours.
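System properties are usually passed to Jira as JVM arguments. A minimal sketch, assuming a Linux installation where JVM arguments are set through JVM_SUPPORT_RECOMMENDED_ARGS in <JIRA_INSTALL>/bin/setenv.sh; the value of 6 hours is only an example, not a recommendation:

```shell
# <JIRA_INSTALL>/bin/setenv.sh (excerpt)
# Example value only: move NO HEARTBEAT nodes to OFFLINE after 6 hours.
# If this variable already holds other arguments, append the new one instead
# of replacing them.
JVM_SUPPORT_RECOMMENDED_ARGS="-Djira.not.alive.active.nodes.retention.period.in.hours=6"
```

JVM arguments are read at startup, so a node needs a restart to pick up the change.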

If jira.not.alive.active.nodes.retention.period.in.hours is not set, Jira uses the value of jira.cluster.retention.period.in.days. If neither property is set, the default of two days is used.
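The fallback order can be sketched as a small function. This only illustrates the precedence described above and is not Jira's implementation; the function name and its two positional arguments (the hours value and the days value, either of which may be empty) are hypothetical.

```shell
#!/bin/sh
# Illustrative only: mirrors the documented fallback order.
# $1 = value of the hours property (may be empty)
# $2 = value of the days property (may be empty)
effective_retention_hours() {
    hours="$1"
    days="$2"
    if [ -n "$hours" ]; then
        echo "$hours"            # hours property wins when set
    elif [ -n "$days" ]; then
        echo $((days * 24))      # otherwise fall back to the days property
    else
        echo 48                  # default of two days
    fi
}
```

For instance, `effective_retention_hours "" 1` yields 24, and `effective_retention_hours 6 3` yields 6 because the hours property takes precedence.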

The value of jira.not.alive.active.nodes.retention.period.in.hours should be greater than the startup time of a Jira instance. Otherwise, other nodes in the cluster could move a node that is still starting up to the OFFLINE state.

Remove stale nodes manually

See Remove abandoned or offline nodes in Jira Data Center for details.

